Protect workloads in Kubernetes with mTLS, SPIFFE/SPIRE, and Cilium

Introduction

As organizations gradually move from “monolithic” applications to distributed microservices, several new challenges emerge. A recurring question is how microservices (“workloads”) can safely discover, authenticate, and connect to one another over potentially unreliable networks.

Solving this problem separately in each workload is possible, but it burdens software developers and becomes harder to manage as the number of workloads grows. Instead, organizations large and small are starting to use proxies (such as Envoy) to handle discovery, authentication, and encryption on behalf of a workload. When workloads connect in this manner, the resulting design pattern is popularly described as a service mesh.

The issue arises when services need to communicate securely. To do so, each service authenticates itself by presenting an X.509 certificate associated with a private key. Generating and distributing these certificates manually is sufficient for simple scenarios, but it does not scale.

One way to remove this overhead and scale workload security is to use SPIRE, which provides fine-grained, dynamic workload identity management. SPIRE provides the control plane that provisions SPIFFE IDs to workloads, and these SPIFFE IDs can then be used for policy authorization. It enables teams to define and test policies for workloads running on a variety of infrastructure types, including bare metal, public cloud (such as GCP), and container platforms (like Kubernetes). We will couple Cilium with SPIRE to demonstrate this scenario.

The Scenario Setup

The goal of the scenario is to upgrade the connection between the carts and front-end pods from HTTP to HTTPS. A Cilium Network Policy (CNP) will be used to do this.

To try this, we’ll clone the cilium-spire-tutorials repo from the KubeArmor repository. We use a GKE cluster and the sock-shop as our sample application.

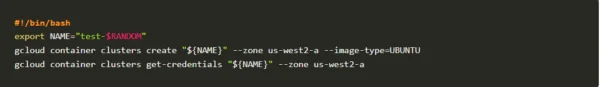

1. Create a cluster under the GCP project

2. Deploy the sample application

kubectl apply -f https://raw.githubusercontent.com/kubearmor/KubeArmor/main/examples/sock-shop/sock-shop-deployment.yaml

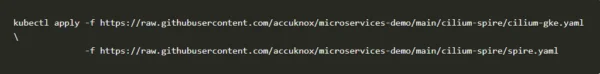

3. Deploy the manifest (cilium-control-plane + spire-control-plane + dependencies).

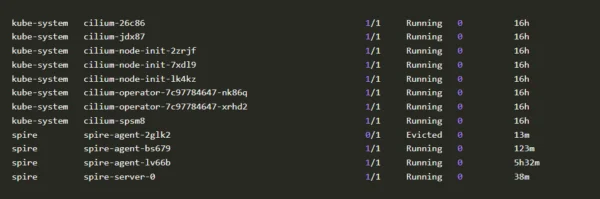

Note: Check the status of all the pods. The spire-control-plane pods (spire-agent and spire-server) should be running, as should the cilium-control-plane pods.

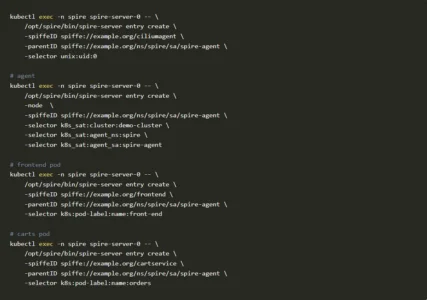
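The status check from the note above can also be done from the command line. The snippet below is a minimal sketch, assuming only that the relevant pod names contain "spire" or "cilium" (as spire-agent and spire-server do); the function name check_control_planes is made up for this post.

```shell
# List pods in every namespace and keep only the SPIRE and Cilium ones.
# Assumption: the pod names contain "spire" or "cilium".
check_control_planes() {
  kubectl get pods --all-namespaces | grep -E 'spire|cilium'
}
```

Run check_control_planes and confirm that the STATUS column reads Running for each listed pod before moving on.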

Configuring SPIRE

A trust domain represents workloads that implicitly trust each other’s identity documents, and a SPIRE Server is bound to it. I’m going to use example.org as the trust domain.
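Every identity in a trust domain is expressed as a SPIFFE ID, a URI of the form spiffe://<trust domain>/<path>. As a small illustration, the shell function below splits an ID such as spiffe://example.org/carts into those two parts. The helper name parse_spiffe_id is made up for this post and is not part of SPIRE.

```shell
# Hypothetical helper (not part of SPIRE): split a SPIFFE ID into its parts.
parse_spiffe_id() {
  id="$1"
  case "$id" in
    spiffe://?*/?*) ;;   # requires a trust domain and a path
    *) echo "not a SPIFFE ID with a path: $id" >&2; return 1 ;;
  esac
  rest="${id#spiffe://}"        # e.g. example.org/carts
  trust_domain="${rest%%/*}"    # text before the first slash
  path="/${rest#*/}"            # text after the first slash
  echo "trust_domain=$trust_domain path=$path"
}
```

For example, parse_spiffe_id spiffe://example.org/carts prints trust_domain=example.org path=/carts.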

For workloads within a trust domain, SPIRE uses an attestation policy to determine which identity to issue to a requesting workload. These policies are usually described in terms of:

- The infrastructure that hosts the workload (node attestation), and

- The OS process ‘hooks’ that identify the workload that is operating on it (process attestation).

For this example, we’ll use the following commands on the SPIRE Server to register the workloads (replace the selector labels and SPIFFE ID as appropriate):

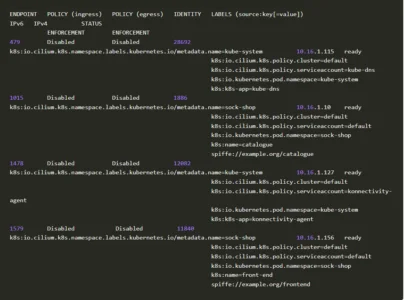
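For readers who cannot make out the screenshot, the registration described above might look roughly like the sketch below. It uses the standard spire-server entry create command with Kubernetes workload selectors. Everything marked as an assumption (the namespace, the server pod name, the parent ID, and the choice of selectors) is not taken from the original walkthrough and may differ in the modified cilium-spire setup, so adjust it to match your deployment.

```shell
# Assumptions (not from the original post): the SPIRE Server runs as pod
# spire-server-0 in namespace "spire", and the agent uses the parent ID below.
SPIRE_NS="spire"
SPIRE_SERVER_POD="spire-server-0"
PARENT_ID="spiffe://example.org/ns/spire/sa/spire-agent"

# Register one workload, identified by the value of its "name" pod label.
register_workload() {
  name="$1"
  kubectl exec -n "$SPIRE_NS" "$SPIRE_SERVER_POD" -- \
    /opt/spire/bin/spire-server entry create \
    -spiffeID "spiffe://example.org/${name}" \
    -parentID "$PARENT_ID" \
    -selector "k8s:ns:sock-shop" \
    -selector "k8s:pod-label:name:${name}"
}
```

With these assumptions, register_workload carts and register_workload front-end would create entries for spiffe://example.org/carts and spiffe://example.org/front-end, the IDs that the network policy refers to later.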

We’ll check the endpoint list in Cilium to make sure that a SPIFFE ID is assigned to the pods.
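One way to pull up that endpoint list is to run cilium endpoint list inside a Cilium agent pod. The sketch below assumes the agents run in the kube-system namespace with the label k8s-app=cilium, as in a standard Cilium install. The modified cilium-spire deployment may use a different namespace, which can be passed as the first argument.

```shell
# Run "cilium endpoint list" in the first Cilium agent pod that is found.
# Assumption: agents carry the label k8s-app=cilium (default namespace: kube-system).
list_cilium_endpoints() {
  ns="${1:-kube-system}"
  cilium_pod="$(kubectl -n "$ns" get pods -l k8s-app=cilium -o name | head -n 1)"
  kubectl -n "$ns" exec "$cilium_pod" -- cilium endpoint list
}
```

Each agent only lists the endpoints on its own node, so on a multi-node cluster pick the agent that runs on the node hosting the carts and front-end pods.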

Perfect! Everything is attached nicely. Let’s go ahead and create a policy that upgrades the egress connection from the front-end pod from HTTP to HTTPS and does the reverse (HTTPS back to HTTP) on ingress at the carts pod.

---
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: frontend-kube-dns
spec:
  endpointSelector:
    matchLabels:
      name: front-end
  egress:
  - toEndpoints:
    - matchLabels:
        io.kubernetes.pod.namespace: kube-system
        k8s-app: kube-dns
    toPorts:
    - ports:
      - port: "53"
        protocol: UDP
      rules:
        dns:
        - matchPattern: "*"
---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "frontend-upgrade-carts-egress-dns"
spec:
  endpointSelector:
    matchLabels:
      name: front-end
  egress:
  - toPorts:
    - ports:
      - port: "80"
        protocol: "TCP"
      originatingTLS:
        spiffe:
          peerIDs:
          - spiffe://example.org/carts
          - spiffe://example.org/catalogue
        dstPort: 443
      rules:
        http:
        - {}
---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "carts-downgrade-frontend-ingress"
spec:
  endpointSelector:
    matchLabels:
      name: carts
  ingress:
  - toPorts:
    - ports:
      - port: "443"
        protocol: "TCP"
      terminatingTLS:
        spiffe:
          peerIDs:
          - spiffe://example.org/front-end
        dstPort: 80
      rules:
        http:
        - {}
---
apiVersion: "cilium.io/v2"
kind: CiliumNetworkPolicy
metadata:
  name: "carts-pod-open-port-80"
spec:
  endpointSelector:
    matchLabels:
      name: carts
  ingress:
  - toPorts:
    - ports:
      - port: "80"
        protocol: "TCP"

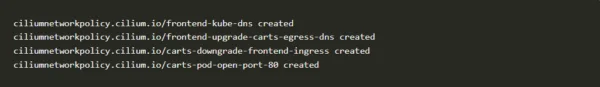

Now that the policy is ready, let us apply it to the cluster and view the result:

kubectl apply -f policy.yaml -n sock-shop

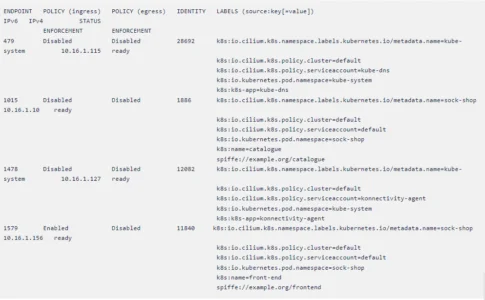
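To double-check that all four policies were created, you can list the CiliumNetworkPolicy objects in the namespace. This is a minimal sketch; the function name list_policies is made up for this post.

```shell
# List the CiliumNetworkPolicy objects in the sock-shop namespace.
list_policies() {
  kubectl -n sock-shop get ciliumnetworkpolicies
}
```

The output should include the four names used in the policy file: frontend-kube-dns, frontend-upgrade-carts-egress-dns, carts-downgrade-frontend-ingress, and carts-pod-open-port-80.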

Inspect the front-end and carts endpoints to see whether the policies are enforced. Remember that we used an egress policy for the front-end pod and an ingress policy for the carts pod. We’ll look for the keyword ENABLED in the appropriate columns.

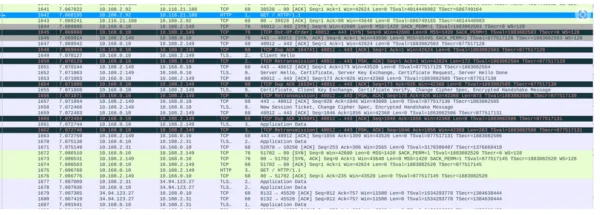

Every step is complete: we have attached SPIFFE IDs to our pods and written a Cilium network policy to upgrade and downgrade the connections on the carts and front-end pods. The only thing remaining is to verify that the policies are enforced.

Since our dockerized application is immutable, we’ll deploy an Ubuntu pod with the same label as the front-end pod.

kubectl -n sock-shop run -it frontend-proxy --image=ubuntu --labels="name=front-end"

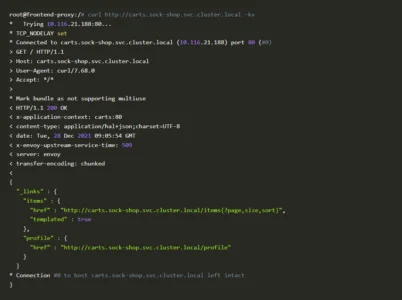

We’ll try to initiate a connection to the carts pod via the carts service from the newly deployed frontend-proxy pod.
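The request itself could be issued along the lines of the sketch below. Two things here are assumptions rather than details from the original walkthrough: the function names, and the default URL path on the carts service. The Ubuntu base image does not ship with curl, so it has to be installed in the pod first.

```shell
# The Ubuntu base image has no curl, so install it inside the pod first.
install_curl() {
  kubectl -n sock-shop exec frontend-proxy -- \
    sh -c 'apt-get update && apt-get install -y curl'
}

# Request the carts service over plain HTTP on port 80.
# Assumption: the default path below; pass another URL as the first argument.
curl_carts() {
  url="${1:-http://carts/carts/1/items}"
  kubectl -n sock-shop exec frontend-proxy -- curl -sv "$url"
}
```

The same apt-get and curl commands can also be typed directly into the interactive shell opened by kubectl run -it. The client speaks plain HTTP on port 80 in either case, and the policy is what should turn the traffic on the wire into TLS.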

The Validation Phase

To validate our hypothesis, we’ll need to capture the traffic originating from the frontend-proxy pod and the carts pod. Luckily for us, both pods reside on the same node, gke-test-28321-default-pool-3956f92a-qz63.

kubectl -n sock-shop get pod -o wide

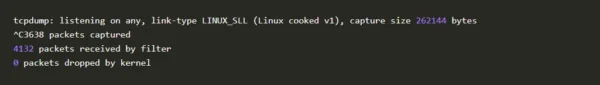

We will use tcpdump to capture the packets on every interface and trigger the curl command once again. Once we receive the response, we’ll save the tcpdump file and analyze it using Wireshark.

SSH into the node and run tcpdump to capture the packets and save them to a dump file:

_@gke-test-28321-default-pool-3956f92a-qz63:~$ sudo tcpdump -i any -w packet.log

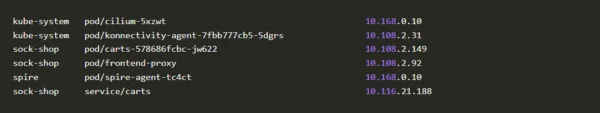

Before decoding the captured packets with Wireshark, let’s take a look at the IPs our application uses.

Conclusion

Using mTLS via Cilium-SPIRE in Kubernetes is straightforward, as you can see from the walkthrough above. This is partly due to the modified cilium-spire agents and partly due to SPIRE doing the heavy lifting of workload identity management.

Now you can protect your workloads in minutes using AccuKnox. It protects your Kubernetes and other cloud workloads using kernel-native primitives such as AppArmor, SELinux, and eBPF.

Let us know if you are seeking additional guidance in planning your cloud security program.