ModelArmor – Strengthen Cloud Security with AccuKnox Solution

An Open-Source Sandbox for Securing Untrusted PyTorch, TensorFlow, NVIDIA, and JupyterHub Workloads.

Open source, powered by KubeArmor

BPF-LSM To Sandbox TensorFlow, PyTorch, CUDA, and NIM Engines.

- Pickle Module Vulnerability: Python’s pickle module can execute arbitrary code when it deserializes untrusted data, which makes loading third-party model files a significant security risk.

- Adversarial Attacks: Studies reveal that 36% of AI systems face compromised outcomes due to adversarial data manipulation.

- Exposed GPU/CUDA Resources: Unauthorized GPU toolkit access remains a top concern in high-performance computing environments.

- Container Breaches: 80% of organizations using containers face misconfigurations that lead to vulnerabilities.

ModelArmor isolates untrusted AI/ML workloads, such as TensorFlow and PyTorch jobs, in a sandbox built on KubeArmor. It enforces the sandbox boundaries using eBPF and Linux Security Modules (LSM), combining strong security with efficient operations.

What Problems Does ModelArmor Solve?

Secure TensorFlow and PyTorch Environments

Isolated execution for TensorFlow and PyTorch models, preventing unwanted process interference.

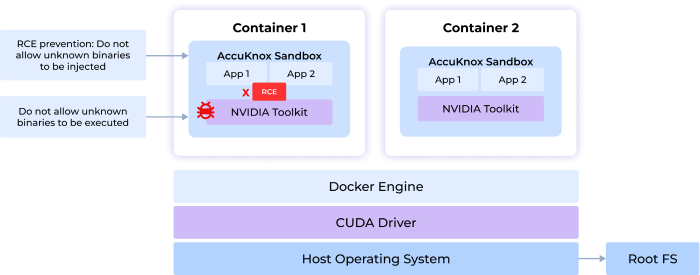

Container Hardening

Prevents vulnerabilities in sandboxed container environments, safeguarding sensitive configurations and processes.

Sandboxed Application Testing

Ensures untrusted applications and binaries are executed securely, avoiding system-wide risks.

GPU/CUDA Security

Secures NVIDIA GPU toolkits from unauthorized access and malicious execution.

Process and Network Controls

Delivers strict network and process-level policies for enhanced isolation and observability.

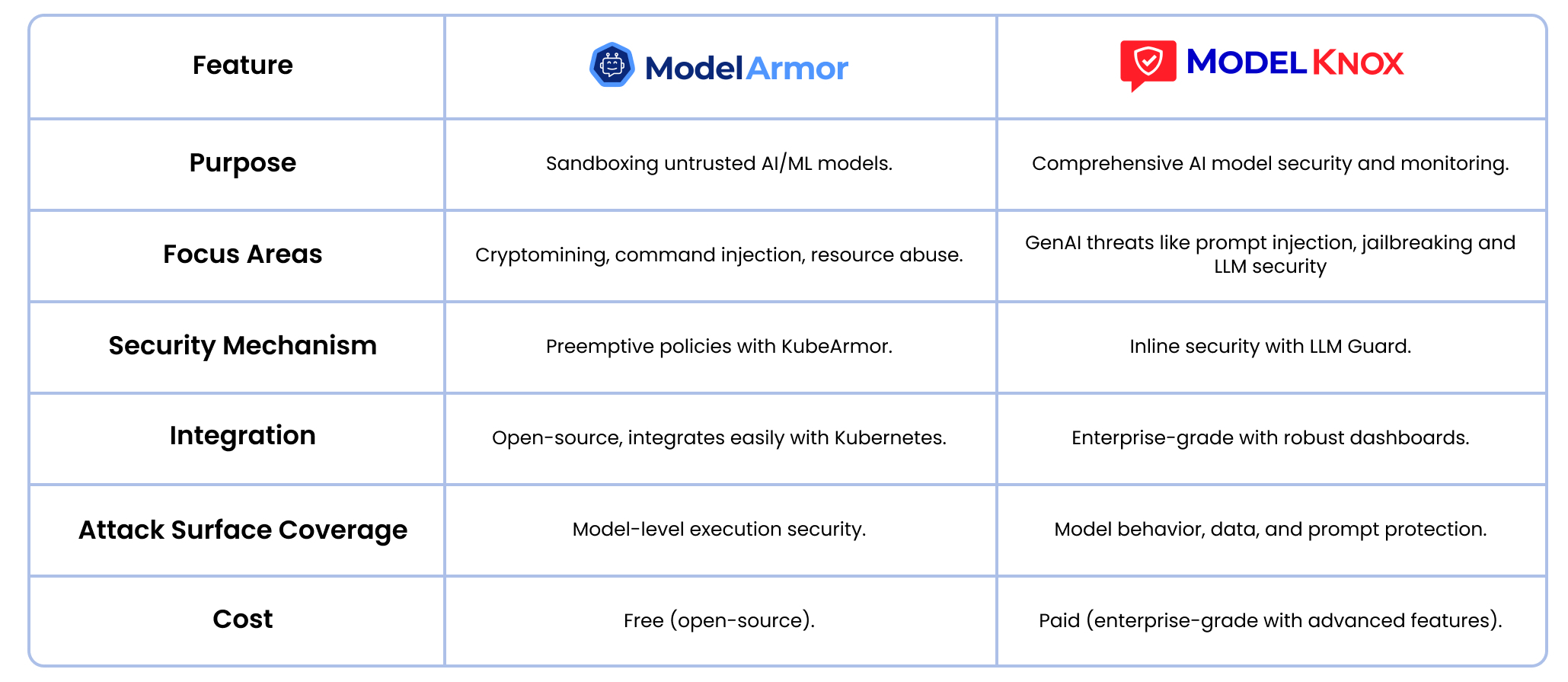

ModelArmor (Open Source) vs ModelKnox (Enterprise) Comparison

Use Cases and Unique Differentiation

- Mitigating Pickle Code Injection: Prevents exploitation of Python’s pickle module by isolating execution and stopping arbitrary code injection.

- Defending Against Adversarial Attacks: Provides input validation, pre-analysis, and isolation to strengthen defenses against adversarial manipulations in AI workflows.

- Securing NVIDIA Microservices: Ensures container hardening, GPU/CUDA isolation, and robust observability to protect NVIDIA’s microservices.

Origin Story and System Design

- Protecting Sensitive Data in AI Systems: Prevents exploitation of Python’s pickle module by isolating execution and stopping arbitrary code injection.

- Sandboxing Untrusted Deployments: Isolates untrustworthy inference engines or models to ensure safe execution and prevent unauthorized access.

- Zero-Trust Security and Auditing: Integrates container hardening, detailed auditing, and reporting to maintain security and transparency.

- NVIDIA NIM-Security: Combines GPU-enabled secure execution with robust network and process controls for TensorFlow, PyTorch, and CUDA applications.

Unique Differentiators

- Prevents unauthorized access to NVIDIA GPU toolkits.

- Keeps TensorFlow and PyTorch environments tightly separated.

- Stops risky Python pickle injections using sandboxing.

- Guards against adversarial attacks with input checks and isolation.

- Provides full process and network monitoring for secure operations.

ModelArmor Use Cases

Pickle Code Injection PoC

The Pickle Code Injection Proof of Concept (PoC) demonstrates the security vulnerabilities in Python’s pickle module, which can be exploited to execute arbitrary code during deserialization.
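The mechanism behind this PoC can be shown in a few lines. The sketch below is illustrative only and is not the PoC itself: the class name `Payload` and the helper `restricted_loads` are hypothetical, and the payload merely evaluates a harmless arithmetic expression where a real attacker would run a shell command. The second half uses the `find_class` override described in the Python standard library documentation; it is an in-process safeguard that complements, rather than replaces, the sandboxing ModelArmor applies from outside the process.

```python
import io
import pickle


class Payload:
    """Hypothetical malicious object. pickle stores the (callable, args)
    pair returned by __reduce__ and calls it again at load time."""

    def __reduce__(self):
        # Harmless stand-in for something like os.system("...").
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())

# Loading the bytes runs eval("6 * 7"); no Payload object is rebuilt.
result = pickle.loads(blob)
print(result)  # 42


class NoGlobalsUnpickler(pickle.Unpickler):
    """Refuses every global lookup, so no callable can be resolved."""

    def find_class(self, module, name):
        raise pickle.UnpicklingError(f"global {module}.{name} is forbidden")


def restricted_loads(data):
    """Load pickled bytes while rejecting any reference to a global."""
    return NoGlobalsUnpickler(io.BytesIO(data)).load()


try:
    restricted_loads(blob)
    blocked = False
except pickle.UnpicklingError:
    blocked = True
print(blocked)  # True
```

Plain containers such as lists and dicts still load through `restricted_loads`, because they need no global lookups. Model checkpoints usually do need them, which is why isolating the loading process remains useful even when the loader itself is restricted.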

Adversarial Attacks on Deep Learning Models (Caldera)

Adversarial attacks exploit vulnerabilities in AI systems by subtly altering input data to mislead the model into incorrect predictions or decisions.
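As a concrete illustration, the sketch below applies one step of the fast gradient sign method (FGSM, the attack named in a later use case on this page) to a toy logistic-regression classifier. Everything in it is an assumption made for illustration: the weights, the input, and the step size `eps` are invented, and the model is a stand-in for a real deep network, not the setup used in the ModelArmor demos.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    """Return -1, 0, or 1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, eps):
    """One FGSM step: move each feature by eps in the direction that
    increases the binary cross-entropy loss for the true label y."""
    p = predict(w, b, x)
    grad = [(p - y) * wi for wi in w]  # d(loss)/dx for sigmoid + cross-entropy
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Invented toy model and input, for illustration only.
w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.3, 0.2, 0.1], 1.0

x_adv = fgsm(w, b, x, y, eps=0.3)

print(round(predict(w, b, x), 3))      # above 0.5: classified as class 1
print(round(predict(w, b, x_adv), 3))  # below 0.5: classification flips
```

No feature moves by more than `eps`, yet the predicted class changes. This is the kind of small, targeted perturbation the description above refers to.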

PyTorch App Deployment with KubeArmor

Steps to deploy a PyTorch application on Kubernetes and enhance its security using KubeArmor policies.
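To give a sense of what such a policy looks like, the sketch below builds a KubeArmorPolicy manifest as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The field layout follows the KubeArmor policy specification as commonly documented and should be checked against the version you run. The policy name, namespace, pod label, and blocked paths are hypothetical placeholders, not values taken from the deployment guide.

```python
import json


def block_process_policy(name, namespace, labels, blocked_paths):
    """Build a KubeArmorPolicy that blocks execution of the given
    binaries in pods matching the given labels."""
    return {
        "apiVersion": "security.kubearmor.com/v1",
        "kind": "KubeArmorPolicy",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Which pods the policy applies to.
            "selector": {"matchLabels": labels},
            # Executables the workload must not start.
            "process": {"matchPaths": [{"path": p} for p in blocked_paths]},
            "action": "Block",
        },
    }


# Hypothetical values: adjust the label to match your PyTorch deployment.
policy = block_process_policy(
    name="pytorch-block-shells",
    namespace="default",
    labels={"app": "pytorch-app"},
    blocked_paths=["/bin/sh", "/bin/bash"],
)

print(json.dumps(policy, indent=2))
```

Blocking shells is only one possible choice. Under the same assumptions, a model-serving pod could also be limited in which files it may read or which network protocols it may use.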

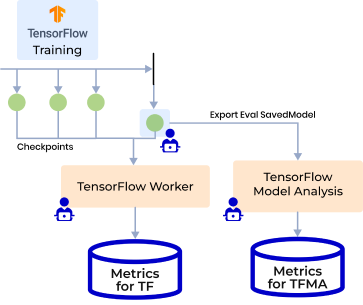

FGSM Attack on a TensorFlow Model

Keras Inject Attack and Apply Policies
