10 Kubernetes Security Best Practices [2024 Update]

Learn about the effective organization and administration of Kubernetes with our handpicked list of Kubernetes security best practices. Dive into container orchestration excellence and streamline your Kubernetes journey. We include common mistakes to avoid as well as configuration examples for better understanding.


Kubernetes, the open-source container orchestration powerhouse, is the linchpin in today’s world of containerization. With thousands of containers running on production servers, seamless management becomes paramount. Adopting Kubernetes also introduces new risks to infrastructure and applications. Security professionals, system administrators, and software developers can use this guide to plan their environments. In the future, we anticipate that these recommendations will be supported by data gathered from companies with varied levels of complexity and maturity. It’s not just about deploying and scaling containers; it’s about orchestrating them with finesse.

💡TL;DR

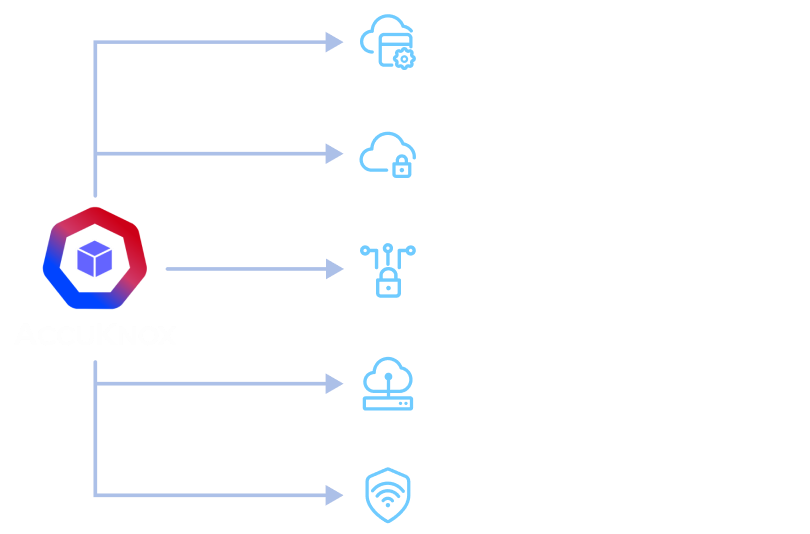

To achieve the highest organizational security, it is essential to follow these best practices for container orchestration in Kubernetes.

The top 10 recommended best practices are:

- Build Small Container Images

- Grant Safe Levels of Access (RBAC)

- Set Resource Requests and Limits

- Kubernetes Termination

- Always Fetch Updates, Latest is the Greatest

- Leverage Namespaces

- Track and Organize with Labels

- Audit Logs Save the Day!

- Apply Affinity Rules (Node/Pod)

- Use livenessProbe and readinessProbe

AccuKnox’s solutions primarily use the open-source KubeArmor CNCF project, which provides inline mitigation, streamlines Linux security modules, and prevents malicious activity. It bridges the gap between BPF-LSM and native Kubernetes Pod Security Context, improving predictability and simplifying multi-cloud compatibility.

In this blog, let us discuss the must-follow Kubernetes security best practices for better container orchestration.

These recommendations will help you manage Kubernetes environments effectively. For the highest organizational security, pair them with a review of the most common Kubernetes security mistakes to avoid.

Build Small Container Images

Smaller container images are preferred because they use less storage space and are faster to pull and build. They also offer a smaller attack surface, so security attacks are less likely to succeed against them.

Using a smaller base image and the builder pattern are two ways to minimize image size. For instance, the NodeJS alpine image is considerably smaller (28 MB) than the most recent NodeJS base image (345 MB). Always use smaller base images and include only the dependencies your program actually needs. With the builder pattern, code is built in one container and then packaged into a final container without the extra compilers or tools.

When working with multiple components, you can use multiple FROM statements in a single Dockerfile (a multi-stage build) and copy only the required artifacts from each stage, which reduces the image size even more. Also, make sure to store config files in version control, as recommended by the official K8s docs.

Grant Safe Levels of Access (RBAC)

According to Red Hat’s 2022 survey, 55% of respondents reported that security issues delayed the release of applications; 59% listed security as a major barrier to using Kubernetes and containers; and 31% reported revenue or customer loss as a result of security issues.

Source: Red Hat 2022 K8s Survey

- Key access parameters to set include the maximum concurrent sessions per IP address, maximum requests per user per second, minute, and hour, maximum request size, and hostname and path restrictions. These measures help safeguard the cluster from DDoS attacks.

- To manage access for developers and DevOps engineers within a Kubernetes cluster, leverage Kubernetes’ role-based access control (RBAC) feature.

- RBAC can be implemented using Roles and ClusterRoles to define specific access profiles.

- Rancher provides RBAC functionality by default, or you can utilize open-source rbac-managers to assist with the syntax and configuration.

- Properly configuring cluster access is a key part of keeping your Kubernetes cluster secure.

Learn how AccuKnox solutions offer RBAC with strict GRC rulesets.

    # Read-only access to pods across the cluster
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: cluster-iam-role
    rules:
    - apiGroups: [""]
      resources: ["pods"]
      verbs: ["get", "list"]
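A role grants nothing until it is bound to a subject. As a minimal sketch, the manifest below binds the cluster-iam-role ClusterRole from this section to a group inside a single namespace. The group name dev-team, the binding name read-pods-binding, and the namespace test-env are assumptions made for illustration.

```yaml
# RoleBinding that grants the ClusterRole's permissions in one namespace only.
# The subject "dev-team", the binding name, and the namespace are hypothetical.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: read-pods-binding
  namespace: test-env
subjects:
- kind: Group
  name: dev-team
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: cluster-iam-role
  apiGroup: rbac.authorization.k8s.io
```

Using a RoleBinding rather than a ClusterRoleBinding keeps the read access scoped to one namespace, which is usually the safer starting point.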

SSH keys, passwords, and other private information are kept in Kubernetes Secrets. If Secret manifests are committed to an Infrastructure-as-Code (IaC) repository, their contents become accessible to anyone who can read the Git history. Risks like this are one reason organizations are adopting DevSecOps more frequently.

Set Resource Requests and Limits

In production clusters with limited resources, application performance can suffer, leading to outages with business implications. Kubernetes addresses this challenge with resource requests and limits, which prevent a single pod from monopolizing CPU and memory and so improve cluster reliability. A container can specify both requests and limits for memory and CPU. A resource request specifies the minimum amount a container needs, while a resource limit defines the maximum it may consume; a request must never exceed the corresponding limit. Failure to define resource constraints can lead to:

- Cluster performance issues

- Increased costs

- Node crashes due to excessive resource usage by pods.
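One way to avoid containers running with no constraints at all is a namespace-level LimitRange, which fills in defaults for containers that omit them. The sketch below is illustrative only: the object name, the namespace test-env, and the values are assumptions, not settings from a real cluster.

```yaml
# LimitRange that applies default requests and limits to containers that
# declare none. The object name, namespace, and values are hypothetical.
apiVersion: v1
kind: LimitRange
metadata:
  name: default-container-resources
  namespace: test-env
spec:
  limits:
  - type: Container
    defaultRequest:   # used when a container sets no request
      cpu: "400m"
      memory: "128Mi"
    default:          # used when a container sets no limit
      cpu: "1000m"
      memory: "256Mi"
```

Containers that set their own requests and limits keep them; the defaults only apply where nothing was specified.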

Properly configured resource requests and limits help maintain optimal Kubernetes cluster operation and application reliability. The example below sets limits of 1000 millicores of CPU and 256 mebibytes of memory, and requests of 400 millicores of CPU and 128 mebibytes of memory.

    containers:
    - name: accuknox-test
      image: ubuntu
      resources:
        requests:
          memory: "128Mi"
          cpu: "400m"
        limits:
          memory: "256Mi"
          cpu: "1000m"

Kubernetes Termination

When a pod is no longer required, Kubernetes handles its termination. Once termination is triggered by a command or an API request, the selected pods enter a terminating state and traffic is no longer routed to them.

Here is how Kubernetes handles pod termination:

- Graceful Termination: By default, Kubernetes starts graceful termination with a 30-second grace period. The containers in the pod are sent a SIGTERM signal at this point, enabling a gentle shutdown. Your program should handle this signal and act on it, for example by closing connections and saving crucial information.

- Forced Termination: If pods are still running after the grace period, Kubernetes forcibly terminates them by issuing a SIGKILL signal. This guarantees that resources are released.

- Cleaning up: After termination, Kubernetes removes the pod objects from the API server, maintaining a tidy cluster environment.

The Twelve-Factor App guidelines should be considered when designing any app lifecycle management.
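When the application itself cannot easily react to SIGTERM, a preStop hook is one way to buy time during graceful termination. The following is a minimal sketch, not a recommended production setting: the pod name graceful-pod, the container command, and the 10-second sleep are assumptions chosen for illustration.

```yaml
# Pod with a preStop hook; the hook runs before the container receives SIGTERM.
# The pod name, the commands, and the 10-second delay are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: graceful-pod
spec:
  terminationGracePeriodSeconds: 30
  containers:
  - name: app
    image: ubuntu
    command: ["sleep", "infinity"]
    lifecycle:
      preStop:
        exec:
          command: ["sh", "-c", "sleep 10"]
```

The time spent in the preStop hook counts against the grace period, so the hook should finish well before terminationGracePeriodSeconds runs out.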

Why It Matters

Efficient pod termination is extremely important for Kubernetes cluster health and performance. It prevents data corruption, ensures smooth transitions during updates or scaling operations, and enables informed decisions about resource management and application reliability.

Consider a database that is being operated by a pod. To make sure that active connections are correctly terminated and data is retained, you might wish to gracefully end a pod when you decide to scale down or upgrade the application.

Let’s imagine that, because of their complicated operations, your pods always take longer than 30 seconds to gracefully end. If you want extra time for cleanup in this situation, you may change the grace period to 45 or 60 seconds.

    apiVersion: v1
    kind: Pod
    metadata:
      name: container10
    spec:
      containers:
      - image: ubuntu
        name: container10
      # Allow up to 60 seconds for cleanup before SIGKILL
      terminationGracePeriodSeconds: 60

Always Fetch Updates, Latest is the Greatest

- It is advised to keep the Kubernetes cluster updated with the most recent stable version.

- Common worries about upgrading include unfamiliarity with new features, a lack of support, and possible compatibility problems.

- The most recent stable version normally includes security and performance upgrades.

- Additionally, it gives users access to greater vendor and community assistance.

- By using this best practice, possible problems with service delivery may be avoided.

- Updating gets easier when utilizing Kubernetes with a cloud provider.

Staying on an outdated version can leave you exposed to a variety of the OWASP Kubernetes Top Ten vulnerabilities.

Leverage Namespaces

Kubernetes ships with several preset namespaces, including:

- default

- kube-system

- kube-public

Namespaces offer logical division inside a cluster, enabling various projects or teams to work together without problems. The advantage is that they reduce interference between teams and allow parallel work in the same cluster. The default namespace could be adequate for small teams with a modest number of microservices (for example, 5–10). In larger companies, or in teams that scale quickly, separate namespaces make administration easier. Workloads in different namespaces can still communicate with each other, so the separation is logical rather than a hard network boundary. Failure to use distinct namespaces may result in accidental interference with the work of other teams. To keep services properly arranged, define and use multiple namespaces.
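A namespace has to exist before resources can be created in it. As a minimal sketch, the manifest below creates the test-env namespace that the pod example in this section targets. The team label and its value are assumptions added for illustration.

```yaml
# Namespace manifest; the name matches the pod example in this section.
# The "team" label and its value are hypothetical.
apiVersion: v1
kind: Namespace
metadata:
  name: test-env
  labels:
    team: test02
```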

Confused regarding policy specifications? Refer to KubeArmor’s pre-packaged policies and outlines here.

Here’s how you can create resources inside the namespace:

    apiVersion: v1            # Pods belong to the core v1 API
    kind: Pod
    metadata:
      name: pod110
      namespace: test-env     # the namespace must already exist
      labels:
        image: pod110
    spec:
      containers:
      - name: prod110
        image: ubuntu

Track and Organize with Labels

As your Kubernetes installations grow, managing the various services, pods, and resources can get complicated. It can be difficult to describe how these resources interact and how they should be replicated, scaled, and maintained. Labels, which are key-value pairs attached to objects, address these issues and keep objects organized, including in the Kubernetes UI. Labels such as app: kube-app, phase: test, and role: front-end illustrate how various objects and resources relate to each other inside the cluster.

For improved control and organization, labels are fantastic.

    apiVersion: v1
    kind: Pod
    metadata:
      name: my-pod
      labels:
        environment: dev-env
        team: test02
    spec:
      containers:
      - name: test02
        image: "ubuntu"       # image names must be lowercase
        resources:
          limits:
            cpu: 2

Consistently labeling resources and objects keeps a production Kubernetes environment much more organized.
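Labels pay off once something selects on them. As an illustrative sketch, the Service below routes traffic only to pods carrying the app: kube-app and role: front-end labels mentioned in this section. The Service name and the port numbers are assumptions.

```yaml
# Service that selects pods by label. The Service name and ports are
# hypothetical; the label keys and values are the ones used as examples above.
apiVersion: v1
kind: Service
metadata:
  name: kube-app-frontend
spec:
  selector:
    app: kube-app
    role: front-end
  ports:
  - port: 80
    targetPort: 8080
```

The same selector works on the command line, for example `kubectl get pods -l app=kube-app,role=front-end`.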

Audit Logs Save the Day!

Audit logs help uncover risks in the Kubernetes cluster. They answer questions such as:

- What happened?

- When did it happen?

- Who made it happen?

In a log file called audit.log, all the information connected to the requests performed to kube-apiserver is recorded. This log file has a JSON structure.

Audit logging is only active when it is configured on the kube-apiserver. In a common setup, the audit log is stored in /var/log/audit.log and the audit policy is kept at /etc/kubernetes/audit-policy.yaml.

To enable audit logging, start the kube-apiserver with these parameters:

    --audit-policy-file=/etc/kubernetes/audit-policy.yaml --audit-log-path=/var/log/audit.log

Here is a sample audit policy file (audit-policy.yaml) configured for logging changes to pods:

    apiVersion: audit.k8s.io/v1
    kind: Policy
    # Do not generate events for the RequestReceived stage
    omitStages:
      - "RequestReceived"
    rules:
      # Log request and response bodies for pods
      - level: RequestResponse
        resources:
        - group: ""
          resources: ["pods"]
      # Log only metadata for pod logs and status
      - level: Metadata
        resources:
        - group: ""
          resources: ["pods/log", "pods/status"]

If a problem arises in a Kubernetes cluster, you can always go back and review the audit logs. They show what changed and who changed it, which helps you restore the cluster to its original condition.

Here is how process whitelisting is done in policies. Zero Trust is the best approach for this.

Apply Affinity Rules (Node/Pod)

Kubernetes has two mechanisms, pod affinity and node affinity, for controlling more precisely where pods are placed. Using them is advised for improved performance. In a Kubernetes cluster, pods are scheduled based on predetermined parameters, and the scheduler chooses a suitable node based on each pod’s requirements.

    apiVersion: v1
    kind: Pod
    metadata:
      name: ubuntu
    spec:
      affinity:
        nodeAffinity:
          # Soft rule: prefer nodes labeled disktype=ssd
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 2
            preference:
              matchExpressions:
              - key: disktype
                operator: In
                values:
                - ssd
      containers:
      - name: ubuntu
        image: ubuntu
        imagePullPolicy: IfNotPresent

Pod affinity schedules related pods close together, on the same node or in the same zone, to minimize latency and enhance performance. Pod anti-affinity does the opposite and supports high availability by keeping pods on separate nodes.

    apiVersion: v1
    kind: Pod
    metadata:
      name: ubuntu-pod
    spec:
      affinity:
        podAffinity:
          # Hard rule: run in the same zone as pods labeled security=S1
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: security
                operator: In
                values:
                - S1
            # Replaces the deprecated failure-domain.beta.kubernetes.io/zone label
            topologyKey: topology.kubernetes.io/zone
      containers:
      - name: ubuntu-pod
        image: ubuntu

Analyzing your cluster’s workloads will help you choose which affinity technique to employ.

Hack-proof your K8s clusters with our expert tips and hands-on demo.
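Keeping pods on separate nodes for high availability is expressed with podAntiAffinity rather than podAffinity. The manifest below is a minimal sketch: the pod name web-replica and the app: web label are assumptions, and the rule says that no two pods with that label may share a node.

```yaml
# Pod that refuses to share a node with other pods labeled app=web.
# The pod name and the "app: web" label are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: web-replica
  labels:
    app: web
spec:
  affinity:
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
      - labelSelector:
          matchExpressions:
          - key: app
            operator: In
            values:
            - web
        topologyKey: kubernetes.io/hostname
  containers:
  - name: web-replica
    image: ubuntu
```

Because the rule is required, a pod stays Pending when no node qualifies; the preferredDuringSchedulingIgnoredDuringExecution variant is the softer option when that is not acceptable.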

Use livenessProbe and readinessProbe

Health checks are essential in Kubernetes for keeping track of the status of applications. They come in two varieties:

- Readiness probes: Check whether an application is ready to serve traffic before it receives user requests. Until a readiness probe succeeds, Kubernetes does not route traffic to the pod.

- Liveness probes: Determine whether a program is still running properly or has stopped responding. Kubernetes restarts the container if the probe fails.

These checks can use three kinds of probes: HTTP, Command, and TCP. During an HTTP probe, Kubernetes sends a request to a specified path on the application’s HTTP server. A response code of at least 200 and below 400 marks the application as healthy; any other code, or no response at all, marks it as unhealthy. For a more guided walkthrough, refer to the official K8s recommendations.
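The TCP and Command probe types follow the same pattern as HTTP probes. The sketch below pairs a TCP readiness probe with a command-based liveness probe. The pod name probe-demo, port 8080, the file /tmp/healthy, and the timing values are all assumptions, and the container is assumed to run a server on that port and to maintain that file.

```yaml
# Pod with a readiness probe (TCP) and a liveness probe (command).
# The pod name, port, file path, and timings are hypothetical.
apiVersion: v1
kind: Pod
metadata:
  name: probe-demo
spec:
  containers:
  - name: probe-demo
    image: ubuntu
    readinessProbe:
      tcpSocket:
        port: 8080
      initialDelaySeconds: 5
      periodSeconds: 10
    livenessProbe:
      exec:
        command: ["cat", "/tmp/healthy"]
      initialDelaySeconds: 5
      periodSeconds: 10
```

The TCP probe succeeds when a connection to the port can be opened, and the command probe succeeds when the command exits with status 0.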

The example below configures an HTTP liveness probe:

    apiVersion: v1            # Pods belong to the core v1 API
    kind: Pod
    metadata:
      name: container201
    spec:
      containers:
      - image: ubuntu
        name: container201
        livenessProbe:
          httpGet:
            path: /staginghealth
            port: 8081

Conclusion

Most of AccuKnox’s solutions rely on the open-source KubeArmor CNCF project. By providing inline mitigation, streamlining Linux security modules, and thwarting malicious activity, KubeArmor enhances adherence to the top 10 Kubernetes best practices.

It fills the gap between BPF-LSM and the native Kubernetes Pod Security Context, increasing predictability and making multi-cloud compatibility simpler. KubeArmor restricts behaviors, enforces security policies in real time, and controls container communication. Here is how we achieve it at AccuKnox.

| Feature / Use Case | KubeArmor’s Role |
| --- | --- |
| Inline Mitigation | Provides inline mitigation to reduce the attack surface on pods, containers, and virtual machines. |
| Simplification of LSM Complexities | Simplifies the intricacies of Linux Security Modules (LSMs) for policy enforcement. |
| Proactive Security | Prevents malicious activities before they occur, taking a proactive security stance. |
| Bridging Pod Security Context Gaps | Bridges gaps between native Kubernetes Pod Security Context and BPF-LSM, improving predictability. |
| Multi-Cloud Compatibility | Simplifies multi-cloud compatibility by bridging gaps between different cloud providers. |
| Behavior Restriction | Restricts specific behaviors within workloads, including process activities and resource usage. |
| Runtime Policy Enforcement | Enforces security policies in real-time based on container or workload identities. |
| Policy Description Simplification | Simplifies policy descriptions, making it easier to manage LSM complexities. |
| Network Security | Regulates communication between containers using network system call controls, enhancing network security. |
| Kubernetes-Native Integration | Integrates seamlessly with Kubernetes metadata, enabling Kubernetes-native security enforcement. |
| 5G Zero-Trust Security | Collaborates with the 5G Open Innovation Lab to deliver 5G zero-trust security in emerging Kubernetes applications. |
| Commitment to Security and Innovation | Ensures container orchestration environments are fortified against emerging challenges with a dedication to security and innovation. |
