
Achieve Multi-Cloud AI & LLM Security Against Top Modern Attack Vectors

All Things AI Security, from Development to Deployment

Model Security

Agentless and quick setup

- Vulnerability Scanning

- Supply Chain Hardening

- Observability into Prompt Usage

- Model Hijacking Protection

Dataset Security

Defense against data extraction

- Data Privacy Scanning

- Secure Data Access

- Data Poisoning Protection

- Secure Data Pipelines

Application Security

Defense against jailbreaking and prompt injection

- AI Red Teaming

- Secure AI Packaging

- Development Environment Hardening (Jupyter Notebook)

- Application Security Testing

Container Security

Runtime Security for Containers

- AI Workload Security

- Secure AI Inference

- Securing NIM Microservices

- Container Image Scanning

The ModelKnox dashboard is simple and intuitive and provides real-time visibility into potential security risks such as prompt injection, model architecture vulnerabilities, and misconfigurations that can lead to data breaches or policy violations.

ModelKnox Features

Data Security

- Prevent dataset tampering

- Find secrets in datasets

- Protect dataset access

- Secure data storage

Training Security

- Prevent model backdooring

- Ensure model provenance

- Protect training pipelines

- Secure artifact access

Model Security

- Conduct AI red teaming

- Enforce safety policies

- Ensure AI compliance

- Verify supply chain

Application Security

- Package models securely

- Validate application security

- Manage security posture

- Protect AI workloads

Runtime Security

- Observe runtime security

- Ensure safe consumption

- Ensure secure inference

- Respond to incidents

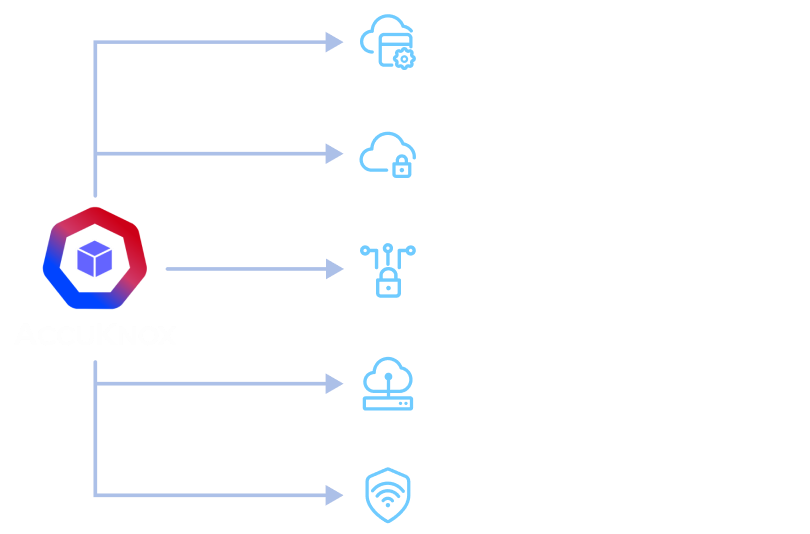

Achieve Multi-Cloud AI Workload and LLM Security

One Platform to Secure All AI Workloads

Siloed Tools Mean Less Context and Slower Response


Pre-Development Model Security Scan

Identify vulnerabilities in AI models before application development. Scan for risks such as insecure architectures, data leakage, or susceptibility to adversarial attacks.

Use Case: Detect whether a model exposes sensitive data during inference or is prone to exploitation.

Multi-Cloud Asset Discovery for AI Applications

Discover AI/LLM/ML assets across multi-cloud environments. Automatically identify models, datasets, and compute resources linked to applications.

Use Case: Locate all LLM instances and connected datasets deployed across AWS, Azure, and GCP.

AI Application Security Assessment

Detect security issues in AI applications, including cloud infrastructure and model-level risks. Find misconfigured storage, insecure APIs, or vulnerabilities like prompt injection.

Use Case: Identify exposed S3 buckets or models vulnerable to jailbreaking.

Automated Triage for Model Security Findings

Automate the handling of bulk security findings. Create rules to classify, prioritize, and resolve issues like misconfigurations or unauthorized access.

Use Case: Flag high-risk problems such as unencrypted datasets for immediate action.
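The rule-based triage described above can be illustrated with a minimal Python sketch. It assumes a hypothetical `Finding` record, hypothetical rule names, and hypothetical attribute keys (`encrypted`, `public`, `auth`); none of these are ModelKnox's actual API or schema. Each rule pairs a predicate with a severity and an action, the first matching rule classifies a finding, and the results are sorted so that high-risk items such as unencrypted datasets come first.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical finding record (an assumption, not ModelKnox's real schema).
@dataclass
class Finding:
    resource: str
    kind: str                      # e.g. "dataset", "storage", "api"
    attrs: dict = field(default_factory=dict)
    severity: str = "unclassified"
    action: str = "none"

# A rule: a predicate plus the severity and action it assigns on a match.
@dataclass
class Rule:
    name: str
    matches: Callable[[Finding], bool]
    severity: str
    action: str

SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3, "unclassified": 4}

# Illustrative rules only; names and attribute keys are hypothetical.
RULES = [
    Rule("unencrypted-dataset",
         lambda f: f.kind == "dataset" and not f.attrs.get("encrypted", True),
         "critical", "remediate-now"),
    Rule("public-storage",
         lambda f: f.kind == "storage" and f.attrs.get("public", False),
         "high", "remediate-now"),
    Rule("unauthenticated-api",
         lambda f: f.kind == "api" and not f.attrs.get("auth", True),
         "medium", "ticket"),
]

def triage(findings: list[Finding], rules: list[Rule] = RULES) -> list[Finding]:
    """Classify each finding with the first matching rule, then sort by severity."""
    for f in findings:
        for rule in rules:
            if rule.matches(f):
                f.severity, f.action = rule.severity, rule.action
                break  # first match wins, so list rules from most to least severe
    # sorted() is stable, so findings of equal severity keep their input order
    return sorted(findings, key=lambda f: SEVERITY_RANK[f.severity])

if __name__ == "__main__":
    findings = [
        Finding("s3://example-bucket", "storage", {"public": True}),
        Finding("training-set-v2", "dataset", {"encrypted": False}),
        Finding("inference-api", "api", {"auth": True}),
    ]
    for f in triage(findings):
        print(f.resource, f.severity, f.action)
```

In this sketch the unencrypted dataset is ranked first, the public bucket second, and the authenticated API stays unclassified because no rule matches it. Keeping rules as plain data makes it easy to add, reorder, or disable them without touching the triage loop.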

Defend Against AI Attack Vectors

Jailbreaking

Prompt injection

Backdoor and data poisoning

Adversarial inputs

Insecure output handling

Data extraction and privacy

Data reconstruction

Denial of service

Watermarking and evasion

Model theft

ModelKnox Use Cases

Did you know? AI attacks make headlines every other week.

Key Differentiators

| Criteria | | Cloud AI-SPM (Tool X) | End-to-end security (Tool Y) | AI red teaming (Tool Z) |
|---|---|---|---|---|
| AI-SPM | | | | |
| Application Security | | | | |
| Workload Security | | | | |
| Safety Guardrails | | | | |
| Security Monitoring | | | | |

Powered by Partners

Need Advice from ModelKnox on Your Cloud Security?

Join the Waitlist for Early Access

All Advanced Attacks are Runtime Attacks

Zero Trust Security

Code to Cloud

AppSec + CloudSec

Prevent attacks before they happen

Schedule 1:1 Demo