Chatbot leaks customer data – Defend with AccuKnox AI-SPM AI security

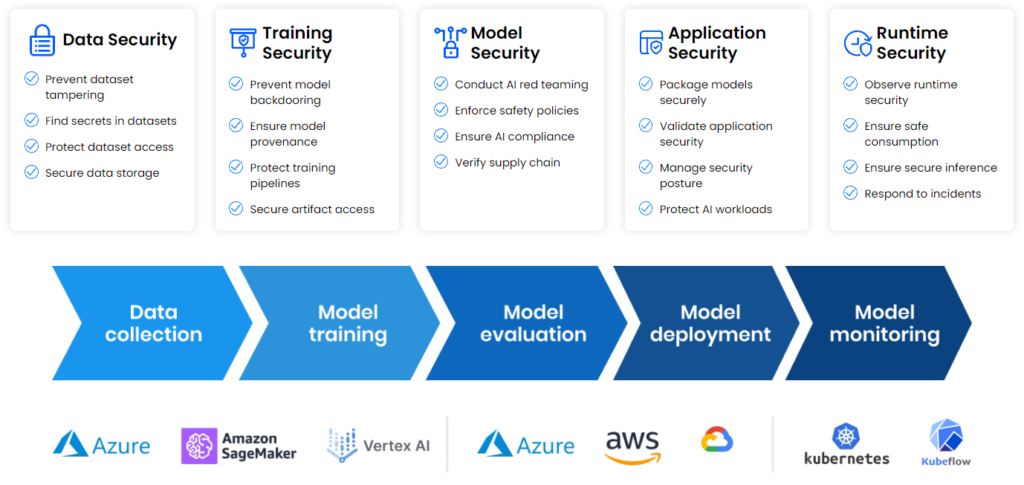

A technical exploration of PII protection mechanisms in machine learning workloads, focusing on automated detection, runtime prevention, and compliance challenges in modern AI infrastructure, and on how AccuKnox AI-SPM can help prevent such leaks.


The WotNot PII Leak

Recently, Indian AI startup WotNot was found to have exposed a database containing sensitive personal records, including passports, medical records, resumes, and travel itineraries. The leak, discovered by Cybernews researchers, presents a significant security and privacy threat to affected individuals. Cybercriminals may use the information to open fraudulent financial accounts, file false insurance claims, and launch spear phishing attacks and other social engineering schemes.

The company claims that the exposed bucket was designated for users on its free plan. WotNot states that it provides private instances to ensure that security and compliance standards are strictly adhered to. Such risks often stem from insufficient oversight of datasets and models, unregulated workflows, and a lack of visibility into the training and inference processes.

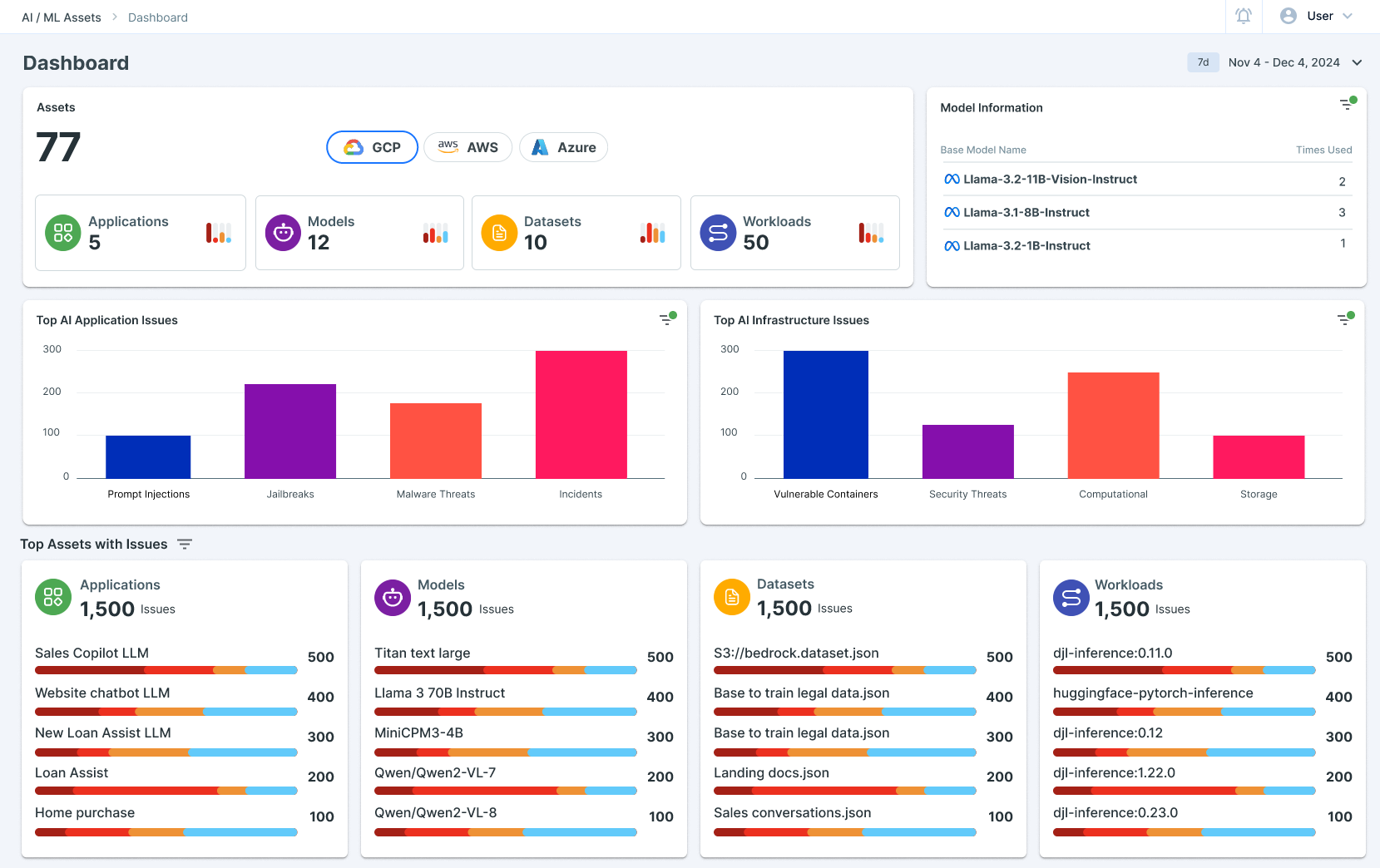

By contrast, with AccuKnox AI-SPM's comprehensive dashboard and security features, organizations can proactively safeguard their AI/ML ecosystems.

The Need for PII Protection

In the era of Generative AI, Large Language Models (LLMs) are becoming ubiquitous in modern applications, making the protection of sensitive information more critical than ever. As digital identities grow increasingly complex, the challenge of detecting and anonymizing Personally Identifiable Information (PII) has emerged as a paramount concern for organizations.

Key Challenges in PII Protection:

- PII serves as the core of an individual’s digital identity

- Global regulations mandate rigorous protection mechanisms

- Uncontrolled PII can proliferate across multiple platforms, increasing breach risks

AccuKnox AI-SPM, AccuKnox's advanced AI/ML workload security solution, addresses these challenges head-on by integrating LLM Guard's PII detection capabilities. It provides real-time monitoring, data validation, and compliance enforcement to prevent PII data leaks. Together, they act as a digital sentinel, scrutinizing prompts as they arrive to identify and anonymize sensitive information across multiple categories:

- Credit card numbers

- Personal names

- Phone numbers

- Email addresses

- IP addresses

- Social security numbers

- Cryptocurrency wallet addresses
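To make the idea of detecting and anonymizing these categories concrete, here is a minimal sketch using only Python's standard library. It is not how LLM Guard or AccuKnox AI-SPM is implemented: the `PII_PATTERNS` table and the `anonymize` function are hypothetical names, and the regular expressions are simplified assumptions that cover only a few of the categories above. Personal names and wallet addresses, for instance, generally need model-based detection rather than a regex.

```python
import re

# Illustrative patterns only. Production scanners combine NER models,
# checksums, and context; these simplified regexes are assumptions.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CREDIT_CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "IPV4": re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"),
}


def anonymize(prompt):
    """Replace each match with a placeholder; return (redacted_text, findings)."""
    findings = []
    redacted = prompt
    for label, pattern in PII_PATTERNS.items():
        def _redact(match, label=label):
            # Record what was found so it can be reported or audited later.
            findings.append((label, match.group(0)))
            return f"[REDACTED_{label}]"
        redacted = pattern.sub(_redact, redacted)
    return redacted, findings


prompt = (
    "Email jane.doe@example.com, SSN 123-45-6789, "
    "card 4111 1111 1111 1111, host 10.0.0.12."
)
redacted, findings = anonymize(prompt)
print(redacted)
print(findings)
```

The placeholder keeps the category visible, so a downstream model still sees that an email or card number was present without seeing its value.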

PII Leakage Prevention with AccuKnox AI-SPM – A Walkthrough

Step 1: Access the All Models View

The first step to securing your models with AccuKnox AI-SPM is accessing the “All Models” view. This centralized dashboard provides an overview of all AI/ML models deployed across your organization. Key metrics like model status, last training date, and dataset provenance are readily visible.

For example, imagine managing a Sales Copilot application similar to WotNot. From the dashboard, you can quickly identify which models handle customer data and flag any potential risks.

Benefits:

- Centralized visibility of all models

- Quick identification of high-risk models

- Actionable insights into data lineage
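As a rough illustration of what "quick identification of high-risk models" could look like in code, the sketch below filters a small model inventory. The `ModelRecord` fields, the sample entries, and the rule itself (a model that handles customer data and draws on a dataset source outside a trusted list) are all assumptions made for this example, not the AccuKnox AI-SPM data model.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Set, Tuple


@dataclass
class ModelRecord:
    # Hypothetical inventory fields mirroring the metrics named above.
    name: str
    status: str
    last_trained: date
    dataset_sources: List[str]
    handles_customer_data: bool


def flag_high_risk(
    models: List[ModelRecord], trusted: Set[str]
) -> List[Tuple[str, List[str]]]:
    """Return (model name, untrusted sources) for customer-data models."""
    flagged = []
    for model in models:
        unknown = [s for s in model.dataset_sources if s not in trusted]
        if model.handles_customer_data and unknown:
            flagged.append((model.name, unknown))
    return flagged


# Made-up sample inventory for illustration only.
inventory = [
    ModelRecord("sales-copilot", "deployed", date(2024, 11, 2),
                ["crm-export", "public-upload-bucket"], True),
    ModelRecord("doc-summarizer", "deployed", date(2024, 9, 15),
                ["internal-wiki"], False),
]
flagged = flag_high_risk(inventory, trusted={"crm-export", "internal-wiki"})
print(flagged)
```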

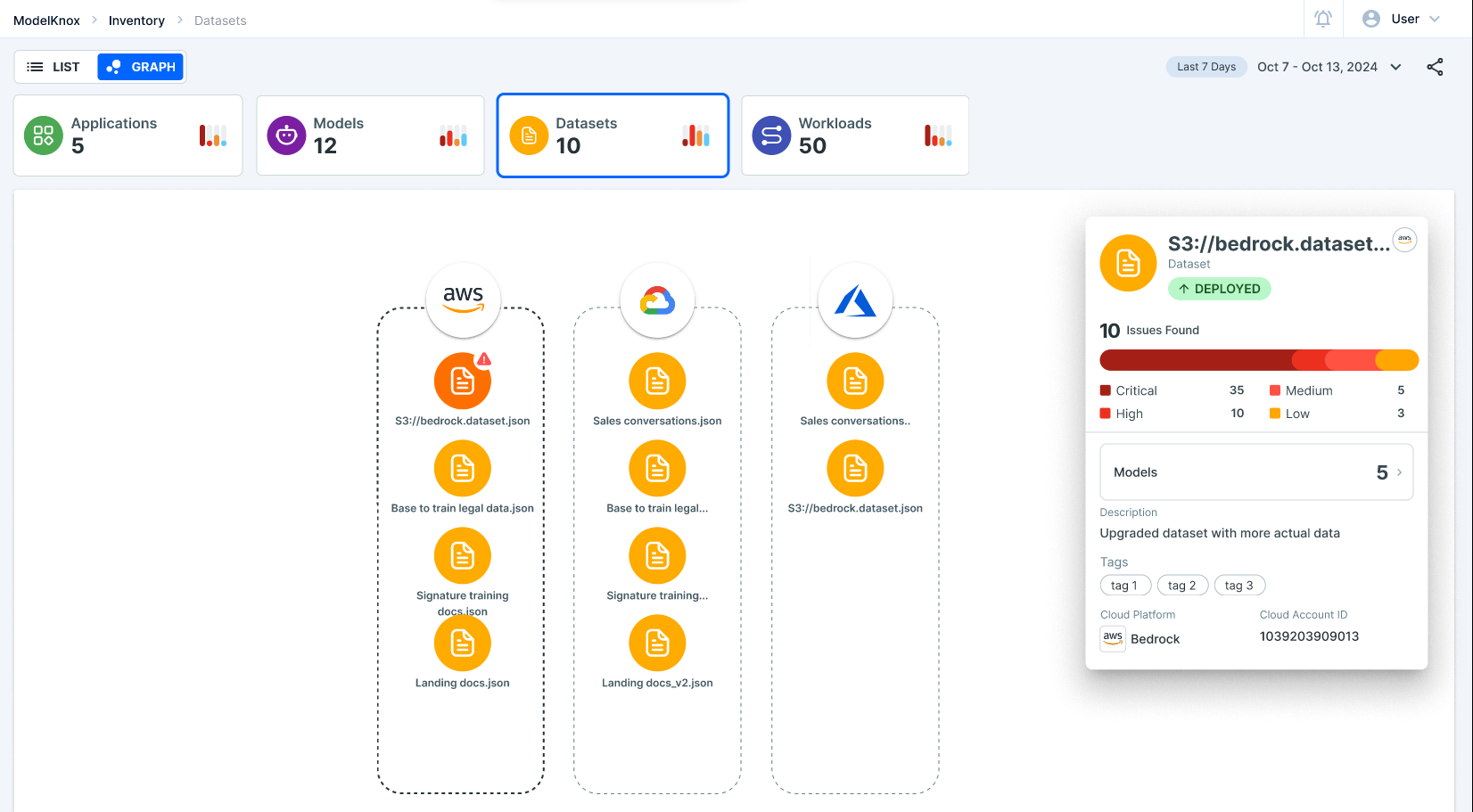

Step 2: Drill Down into Individual Models

From the “All Models” view, select an individual model to access detailed insights. Here, you can review associated datasets, recent training activities, and any flagged anomalies.

In the case of Sales Copilot, the AccuKnox AI-SPM dashboard would display datasets containing PII and alert you to any misconfigurations or non-compliance issues.

Actions:

- Validate datasets for compliance with GDPR, HIPAA, and other regulations

- Monitor training data for unauthorized PII usage

- Review data pre-processing pipelines

Benefits:

- Early detection of missteps in data handling

- Comprehensive data validation
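The dataset validation described in this step can be approximated with a field-level scan that reports which columns contain PII before any training run. This is a simplified sketch under stated assumptions: `DETECTORS` and `scan_dataset` are hypothetical names, only two detectors are shown, and a real compliance check against GDPR or HIPAA involves far more than pattern matching.

```python
import re
from collections import defaultdict

# Hypothetical, simplified detectors; a real validator would cover more types.
DETECTORS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def scan_dataset(rows):
    """Return {field: {label: row_count}} for fields where a detector fired."""
    report = defaultdict(lambda: defaultdict(int))
    for row in rows:
        for field, value in row.items():
            for label, pattern in DETECTORS.items():
                if pattern.search(str(value)):
                    report[field][label] += 1
    return {field: dict(counts) for field, counts in report.items()}


rows = [
    {"id": 1, "notes": "reach me at a@b.io", "region": "EU"},
    {"id": 2, "notes": "ssn 123-45-6789", "region": "US"},
    {"id": 3, "notes": "no contact details", "region": "EU"},
]
report = scan_dataset(rows)
print(report)
```

An empty report means no detector fired. A non-empty one points at the specific fields, here the free-text `notes` column, that need masking or removal before the data is used.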

Step 3: Explore Workloads and Applications

The AccuKnox AI-SPM platform also enables users to track workloads and applications connected to specific models. This feature ensures that no unauthorized application is accessing sensitive data.

By examining the workloads connected to Sales Copilot, users can isolate access points and ensure that only authorized applications interact with sensitive data.

Actions:

- Audit workloads for unauthorized access

- Implement role-based access controls (RBAC)

Benefits:

- Enhanced control over data workflows

- Reduced risk of unauthorized data access
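Auditing workloads against role-based access controls comes down to comparing observed access events with what each role is permitted to do. The sketch below shows that comparison. The role names, action strings, and event format are invented for illustration and do not reflect any AccuKnox API.

```python
# Hypothetical role-to-permission mapping for a "sales-copilot" model.
ROLE_PERMISSIONS = {
    "inference-service": {"sales-copilot:predict"},
    "training-job": {"sales-copilot:read-dataset", "sales-copilot:train"},
}


def audit_access(events, role_permissions):
    """Return the events whose action is not granted to the caller's role."""
    violations = []
    for event in events:
        allowed = role_permissions.get(event["role"], set())
        if event["action"] not in allowed:
            violations.append(event)
    return violations


# Made-up access log: the last two entries should be flagged.
events = [
    {"workload": "api-gateway", "role": "inference-service",
     "action": "sales-copilot:predict"},
    {"workload": "api-gateway", "role": "inference-service",
     "action": "sales-copilot:read-dataset"},
    {"workload": "scratch-notebook", "role": "unknown",
     "action": "sales-copilot:read-dataset"},
]
violations = audit_access(events, ROLE_PERMISSIONS)
for v in violations:
    print(v["workload"], v["action"])
```

Treating an unknown role as having no permissions (the `set()` default) is the deny-by-default choice: a workload that is not in the mapping is flagged rather than silently allowed.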

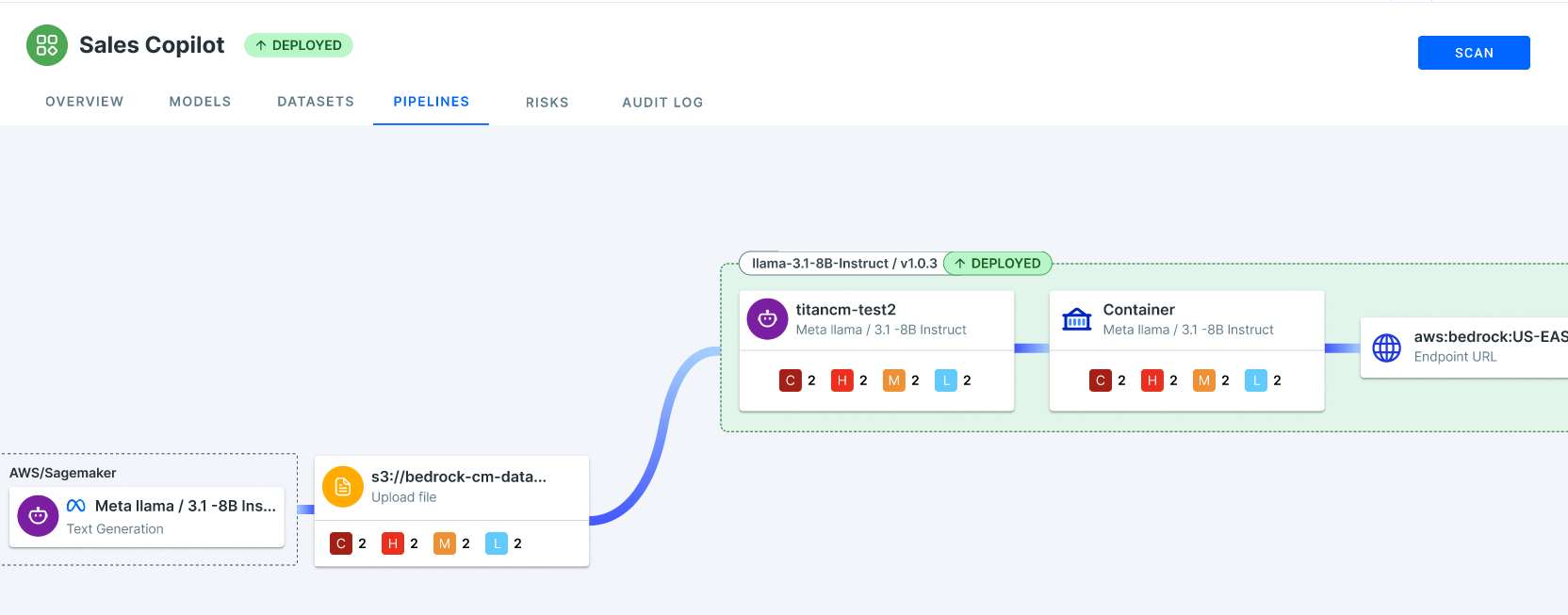

Step 4: Analyze Pipelines and Identify Possible Scenarios of Data Leakage

The pipelines section within AccuKnox AI-SPM allows users to review and manage the flow of data during training and inference. This is a critical step in identifying and addressing vulnerabilities.

The AccuKnox AI-SPM dashboard can simulate a breach scenario like WotNot's. It shows exactly where the PII was exposed (during data ingestion, pre-processing, or inference) and provides remediation steps.

Actions:

- Trace the flow of data across pipelines

- Automate anomaly detection and response

Benefits:

- Reduced response time to potential breaches

- Improved pipeline security
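Tracing data across a pipeline to see where PII is exposed can be sketched by running a record through each stage and checking the output after every step. Everything here is hypothetical: the three stage functions, the single email detector, and the deliberately incomplete masking in `preprocess` are assumptions chosen to show how a gap surfaces, not a depiction of the AccuKnox AI-SPM pipeline view.

```python
import re

EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def ingest(record):
    return dict(record)


def preprocess(record):
    # Masks the body but (deliberately) forgets the free-text notes field.
    out = dict(record)
    out["body"] = EMAIL.sub("[EMAIL]", out["body"])
    return out


def build_prompt(record):
    out = dict(record)
    out["prompt"] = f"{record['body']} | {record['notes']}"
    return out


def trace_pii(record, stages):
    """Run the record through each stage and list fields that still hold PII."""
    trail = []
    for stage in stages:
        record = stage(record)
        exposed = sorted(k for k, v in record.items() if EMAIL.search(str(v)))
        trail.append((stage.__name__, exposed))
    return trail


record = {"body": "Reply to kim@example.org", "notes": "cc lee@example.org"}
trail = trace_pii(record, [ingest, preprocess, build_prompt])
for stage_name, exposed in trail:
    print(stage_name, exposed)
```

In this run the trail shows `notes` surviving pre-processing and then reappearing inside `prompt`, which is the kind of stage-by-stage evidence that points to where a fix belongs.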

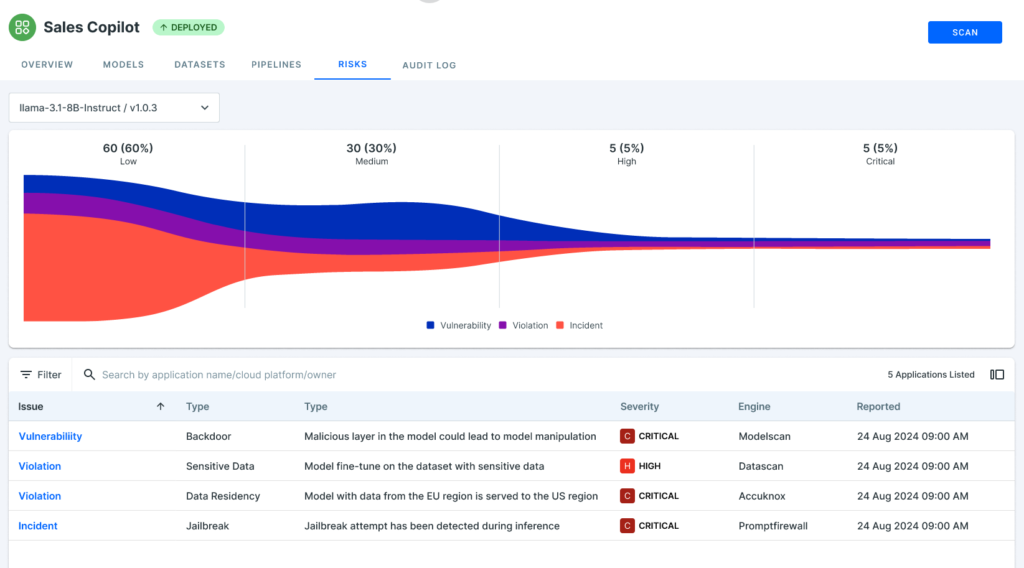

Step 5: Leverage Risk Assessment for Preventive Features

Finally, LLM Guard actively monitors the data ingested by large language models. It employs advanced techniques like differential privacy, token-level validation, and real-time alerts to ensure that no sensitive information is leaked.

With Sales Copilot, for example, LLM Guard would detect and block attempts to ingest unauthorized PII, flag the issue, and prevent the model from completing its task until compliance is restored.

Actions:

- Enable real-time data validation

- Set up alerts for policy violations

Benefits:

- Real-time prevention of PII data leaks

- Automatic enforcement of compliance policies
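The block-and-alert behavior described in this step can be pictured as a thin wrapper around the model call. This is a minimal sketch, not LLM Guard's API: `guarded_call`, `PolicyViolation`, and `echo_model` are hypothetical names, and the single SSN pattern stands in for a full set of validators.

```python
import re

SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


class PolicyViolation(Exception):
    """Raised when a prompt is blocked by the PII policy."""


def guarded_call(prompt, model, alert):
    """Run model(prompt) only if the prompt passes the PII check."""
    if SSN.search(prompt):
        # Alert first, then refuse, so the model never sees the prompt.
        alert("blocked prompt: US_SSN pattern detected")
        raise PolicyViolation("prompt contains PII")
    return model(prompt)


def echo_model(prompt):
    # Stand-in for a real LLM call.
    return f"echo: {prompt}"


alerts = []
ok = guarded_call("Summarize Q3 pipeline", echo_model, alerts.append)
try:
    guarded_call("Customer SSN is 123-45-6789", echo_model, alerts.append)
    blocked = False
except PolicyViolation:
    blocked = True

print(ok, blocked, alerts)
```

Passing `alert` in as a callable keeps the guard independent of any particular notification channel, so the same check could feed a log, a ticketing system, or a dashboard.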

How AccuKnox AI-SPM Could Have Prevented the WotNot Incident

Had WotNot leveraged AccuKnox AI-SPM, the breach could have been avoided. Here’s how:

- Dataset Validation: LLM Guard would have flagged the dataset containing PII during ingestion.

- Pipeline Monitoring: Unauthorized access points in the data pipeline would have been isolated and neutralized.

- Real-Time Alerts: AccuKnox AI-SPM would have sent instant alerts about non-compliance, allowing WotNot’s team to act before the leak occurred.

PII data leakage can result in legal penalties, loss of customer trust, and significant reputational damage. With platforms like AccuKnox AI-SPM, organizations can not only secure their AI/ML models but also build customer confidence by demonstrating their commitment to data protection.

Takeaways

The WotNot data leak underscores the importance of robust security measures for AI/ML models. AccuKnox AI-SPM, with its comprehensive dashboard and LLM Guard's real-time monitoring, provides organizations with the tools they need to prevent PII data leakage. By following the step-by-step guide outlined in this post, users can effectively navigate the AccuKnox AI-SPM platform, secure their models, and ensure compliance with global data protection standards.

In the evolving AI landscape, proactive security is not optional—it’s essential. AccuKnox AI-SPM empowers businesses to protect their data, safeguard their customers, and thrive in a secure AI-driven future. Read more about how you can secure your AI workloads with AccuKnox.

You can protect your workloads in minutes using AccuKnox. It is available to protect your Kubernetes and other cloud workloads using kernel-native primitives such as AppArmor, SELinux, and eBPF. Let us know if you are seeking additional guidance in planning your cloud security program.
